“Shaping the Future of Education: Exploring the Potential and Consequences of AI and ChatGPT in Educational Settings” by Simone Grassini is a well-researched, concise but comprehensive overview of the state of play for generative AI (GAI) in education. It gives a very good overview of current uses, by faculty and students, and provides a thoughtful discussion of issues and concerns arising. It addresses technical, ethical, and pragmatic concerns across a broad spectrum. If you want a great summary of where we are now, with tons of research-informed suggestions as to what to do about it, this is a very worthwhile read.

However, underpinning much of the discussion is an implied (and I suspect unintentional) assumption that education is primarily concerned with achieving and measuring explicit specified outcomes. This is particularly obvious in the discussions of ways GAIs can “assist” with instruction. I have a problem with that.

There has been an increasing trend in recent decades towards the mechanization of education: modularizing rather than integrating, measuring what can be easily measured, creating efficiencies, focusing on an end goal of feeding industry, and so on. It has resulted in a classic case of the McNamara Fallacy, which starts with a laudable goal of measuring success, as much as we are able, and ends with that measure defining success, to the exclusion of anything we do not or cannot measure. Learning becomes the achievement of measured outcomes.

It is true that consistent, measurable, hard techniques must be learned to achieve almost anything in life, and that most of them take sustained effort and study, with which educators can and should help. Measurable learning outcomes and what we do with them matter. However, the more profound and, I believe, the more important ends of education, regardless of the subject, are concerned with ways of being in the world, with other humans. It is the tacit curriculum that ultimately matters more: how education affects our attitudes, our values, the ways we can adapt, how we can create, how we make connections, pursue our dreams, live fulfilling lives, and engage with our fellow humans as parts of cultures and societies.

By definition, the tacit curriculum cannot be meaningfully expressed in learning outcomes or measured on a uniform scale. It can be expressed only obliquely, if it can be expressed at all, in words. It is largely emergent and relational, expressed in how we are, interacting with one another, not as measurable functions that describe what we can do. It is complex, situated, and idiosyncratic. It is about learning to be human, not achieving credentials.

Returning to the topic of AI, learning to be human from a blurry JPEG of the web, or autotune for knowledge, seems to me to be a very bad idea indeed, especially given that training sets will increasingly include the output of earlier models.

The real difficulty that teachers face is not that students solve the problems set to them using large language models, but that in so doing they bypass the process, thus avoiding the tacit learning outcomes we cannot or choose not to measure. And the real difficulty that those students face is that, in delegating the teaching process to an AI, their teachers are bypassing the teaching process, thus failing to support the learning of those tacit outcomes or, at best, providing an averaged-out caricature of them. If we heedlessly continue along this path, it will wind up with machines teaching machines, with humans largely playing the roles of cogs and switches in them.

Some might argue that, if the machines do a good enough job of mimicry, then it really doesn’t matter that they happen to be statistical models with no feelings, no intentions, no connection, and no agency. I disagree. Just as it makes a difference whether a painting ascribed to Picasso is a fake or not, or whether a letter is faxed or delivered through the post, or whether this particular guitar was played by John Lennon, it matters that real humans are on each side of a learning transaction. It means something different for an artifact to have been created by another human, even if the form of the exchange, in words or whatever, is the same. Current large language models have flaws, confidently spout falsehoods, fail to remember previous exchanges, and so on, so they are easy targets for criticism. However, I think it will be even worse when AIs are “better” teachers. When they seem to be endlessly tireless, patient, respectful, and responsive; when the help they give is unerringly personal and accurately targeted; when they accurately draw on knowledge no one human could ever possess, they will not be modelling human behaviour. The best-case scenario is that they will not be teaching students how to be, they will just be teaching them how to do, and that human teachers will provide the necessary tacit curriculum to support the human side of learning. However, the two are inseparable, so that is not particularly likely. The worst scenarios are that they will be teaching students how to be machines, or how to be an average human (with significant biases introduced by their training), or both.

And, frankly, if AIs are doing such a good job of it then they are the ones who should be doing whatever it is that they are training students to do, not the students. This will most certainly happen: it already is (witness the current actors’ and screenwriters’ strikes). For all the disruption that results, it’s not necessarily a bad thing, because it increases the adjacent possible for everyone in so many ways. That’s why the illustration for this post was made to my instructions by Midjourney, not drawn by me. It does a much better job of it than I could do.

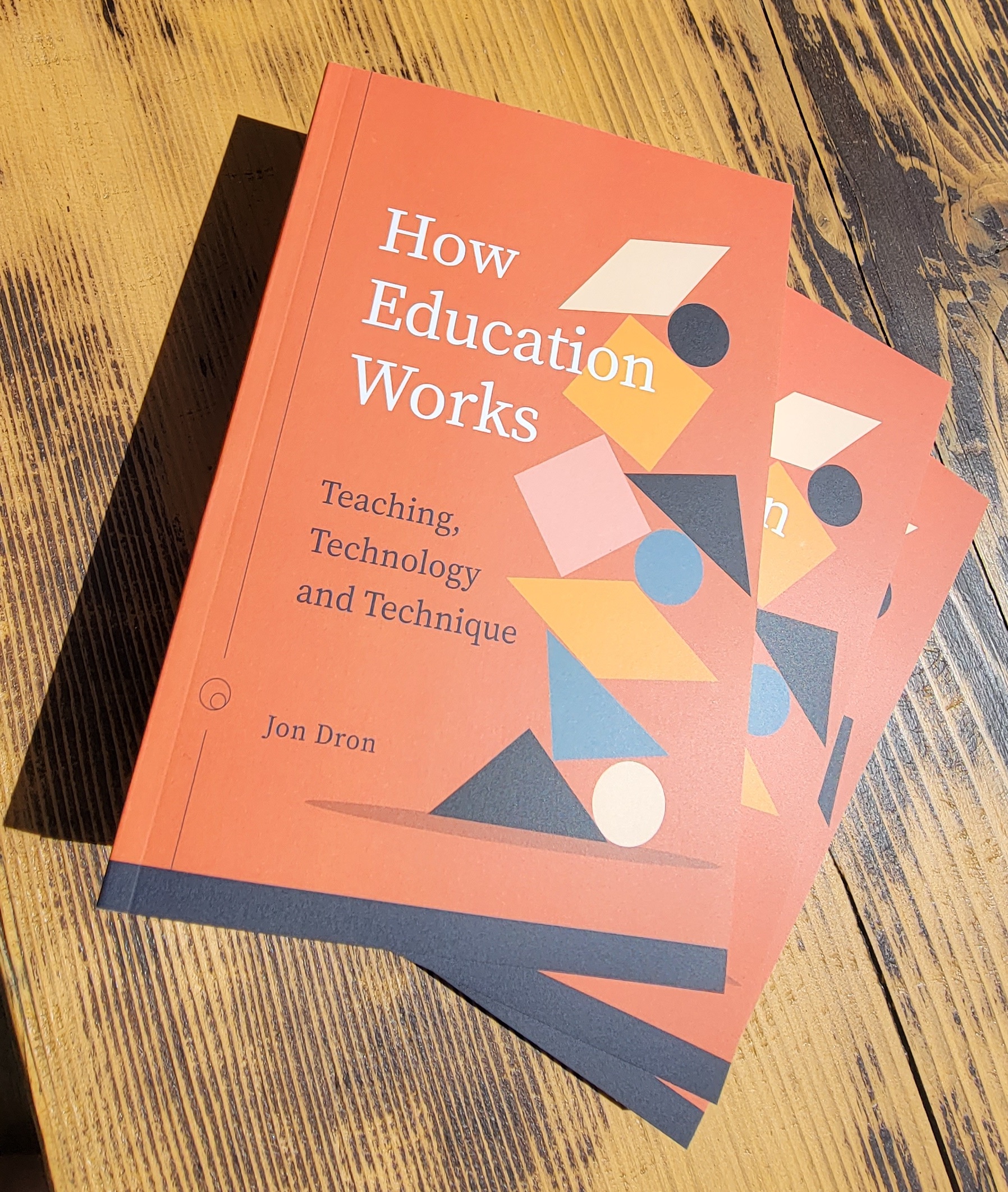

In a rational world we would not simply incorporate AI into teaching as we have always taught. It makes no more sense to let it replace teachers than it does to let it replace students. We really need to rethink what and why we are teaching in the first place. Unfortunately, such reinvention is rarely if ever how technology works. Technology evolves by assembly with and in the context of other technology, which is how come we have inherited mediaeval solutions to indoctrination as a fundamental mainstay of all modern education (there’s a lot more about such things in my book, How Education Works, if you want to know more). The upshot will be that, as we integrate rather than reinvent, we will keep on doing what we have always done, with a few changes to topics, a few adjustments in how we assess, and a few “efficiencies”, but we will barely notice that everything has changed because students will still be achieving the same kinds of measured outcomes.

I am not much persuaded by most apocalyptic visions of the potential threat of AI. I don’t think that AI is particularly likely to lead to the world ending with a bang, though it is true that more powerful tools do make it more likely that evil people will wield them. Artificial General Intelligence, though, especially anything resembling consciousness, is very little closer today than it was 50 years ago and most attempts to achieve it are barking in the wrong forest, let alone up the wrong tree. The more likely and more troubling scenario is that, as it embraces GAIs but fails to change how everything is done, the world will end with a whimper, a blandification, a leisurely death like that of lobsters in water coming slowly to a boil. The sad thing is that, by then, with our continued focus on just those things we measure, we may not even notice it is happening. The sadder thing still is that, perhaps, it already is happening.

Originally posted at: https://landing.athabascau.ca/bookmarks/view/19390937/the-artificial-curriculum

These are the slides from my opening keynote at SITE ’24 today, at Planet Hollywood in Las Vegas. The talk was based closely on some of the main ideas in How Education Works. I’d written an over-ambitious abstract promising answers to many questions and concerns, which I did just about cover, but far too broadly. As a counterbalance, therefore, I tried to keep the focus on a single message – ’tain’t what you do, it’s the way that you do it (which is the epigraph for the book) – and, because it was Vegas, I felt that I had to do a show, so I ended the session with a short ukulele version of the song of that name. I had fun, and a few people tried to sing along. The keynote conversation that followed was most enjoyable – wonderful people with wonderful ideas, and the hour allotted to it gave us time to explore all of them.
